AI and LLMs

Right use of AI - is there such a thing?

I just never seem to get time to sit, focus, and write about pretty much anything on my mind, let alone a complex topic like AI and Large Language Models (LLMs).

I honestly think it's also a case of 'too late'. I should have been throwing words about the topic at the blog like ChatGPT carelessly throws words at every prompt it gets.

The problem is that, unlike ChatGPT, I actually care beforehand that the words are both coherent and meaningful.

So why am I here? Three things in a row in one week....

I subscribe to an AI newsletter to keep up with what's going on in the space. I am a geek after all, and I also want to help people make sense of this stuff that gets jammed down our throats. One example is that our minister will sometimes ask me to put together a short message (called a sermonette) for our church on a technical topic and help people make sense of it from a broader perspective.

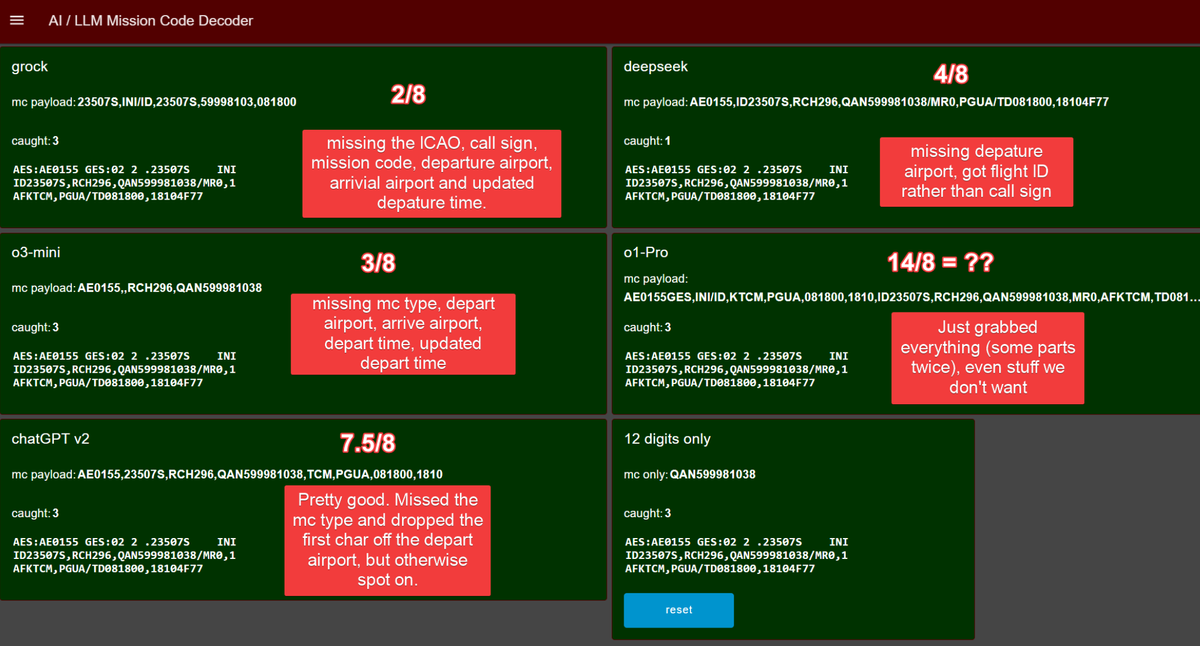

I'm also looking for a leg up in my 'coding' on my ACARS aircraft tracking website. This week's newsletter had two blurbs. I won't link the URLs, but here is #1.

New research by Google: gen AI hits 98% enterprise adoption, but data quality and security concerns linger.

Google Cloud's survey of 500+ global technology leaders validates what everyone is feeling: generative AI is now mainstream (sorry AI hipsters), and it's present at the vast majority of larger organizations.

However, not all is smooth sailing, and many are still figuring out the best way to build an AI-ready cloud that's robust, secure, and cost-effective.

So firstly, it really hit me... "Huh, so I'm in the 2%". And the interesting thing about that fact is that I try. I honestly do try. Probably about every 2 weeks, I try to use an AI for some task that I'm told 'will be perfect for AI'... And the results are just sooooo horrible it takes me two weeks to get over the lame experience.

I don't even get to the security concerns stage. My questions / prompts are so basic and bland that there are no security concerns at all at this point in my attempts.

Ok, newsletter point #2

Claude 3.5 Sonnet significantly outperformed human persuaders in a controlled experiment where both attempted to influence participants' answers on quiz questions. Claude achieved 7.6% higher success rates when trying to convince participants to select specific answers and was more effective both at promoting correct answers (+12.2%) and incorrect answers (-15.1%).

Someone thought to see if AI can sway the way people think - duh.

What it did was make me think about my goal in using AI and whether it is actually going to be helpful to the end user - I am not trying to use AI for me, but for my ACARS aircraft website users. I want them to have a better, more accurate, meaningful, and helpful experience on the website... Is the AI I build to that end going to be factual or persuasive?

That, then, brings me to the last thought I have.

To introduce that topic, I did an ACARS blog post on it... Worth a read if you are interested in 'right use of AI, LLMs and ACARS'.

https://k6thebaldgeek.blogspot.com/2025/05/guest-blog-elbasatguy-ai-llm.html